AMD and Nvidia are competing for the title of best maker of AI accelerator chips, also known as neural processing units (NPUs). With the launch of its new MI300X AI accelerator, AMD claimed that the chip can match or exceed the performance of Nvidia's H100, by up to 1.6x. Nvidia disputed the comparison and responded with benchmarks indicating that the H100 performs significantly better than the MI300X once Nvidia's software optimizations are taken into account. AMD has now published a new response suggesting that the MI300X delivers 30% higher performance than the H100.

MI300X vs. H100: AMD and Nvidia each defend the superiority of their AI chip

A neural processing unit is a microprocessor specialized in accelerating machine learning algorithms, usually by operating on predictive models such as artificial neural networks (ANNs) or random forests. It is also known as a neural processor or AI accelerator. These AI processors have seen strong growth in recent years due to the ever-increasing computing needs of AI companies and the arrival of large language models (LLMs). So far, Nvidia has largely dominated the market.

However, the Santa Clara company is increasingly being challenged by its rival AMD. To narrow the gap with Nvidia, AMD has launched a new AI accelerator called the Instinct MI300X. AMD CEO Lisa Su and colleagues demonstrated the MI300X's performance by comparing its inference performance with that of Nvidia's H100 using Llama 2. According to the comparison, a single AMD server with eight MI300X accelerators would be 1.6 times faster than an H100 server. Nvidia rejected the comparison and, in a blog post published in response to AMD's benchmarks, criticized its competitor's results.

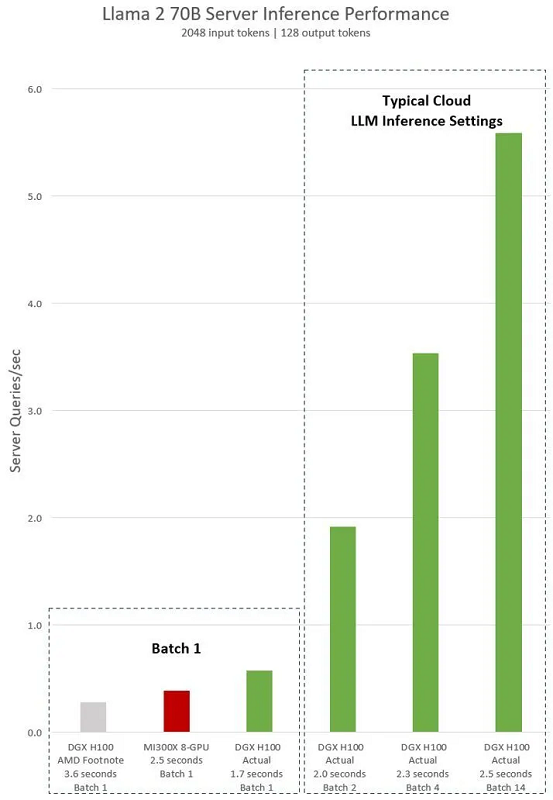

Contrary to AMD's presentation, Nvidia claims that its H100 chip, when properly evaluated with optimized software, significantly outperforms the MI300X. Nvidia says that AMD did not account for the optimizations provided by TensorRT-LLM. Developed by Nvidia, TensorRT-LLM is a toolkit for assembling optimized solutions for large language model inference. In its article, Nvidia reported results for a single DGX H100 server with eight H100 GPUs running the Llama 2 70B chat model. The results are striking.

The results, obtained using software that predates AMD's presentation, showed the H100 to be twice as fast at a batch size of 1. In addition, when applying the 2.5-second latency used by AMD, Nvidia emerged as the clear leader, outperforming the MI300X by a factor of 14. How is this possible? The explanation is simple: AMD did not use Nvidia's software, which is optimized for Nvidia hardware. The Santa Clara company says AMD used alternative software that does not support the Transformer Engine of the H100's Hopper architecture.
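The gap between a 2x result at batch size 1 and a much larger result under a fixed latency target can look puzzling. The sketch below is a minimal illustration of how that can happen: under a latency budget, each system is allowed its largest batch that still meets the budget, so a faster system may fit a much bigger batch. All measurements, the `Measurement` class and the helper names are hypothetical and are not vendor results.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    batch_size: int          # number of requests processed together
    latency_s: float         # seconds needed to finish the whole batch
    tokens_per_request: int  # tokens generated for each request


def throughput(m: Measurement) -> float:
    """Generated tokens per second for one measured batch."""
    return m.batch_size * m.tokens_per_request / m.latency_s


def best_under_budget(measurements, budget_s):
    """Highest-throughput measurement whose latency fits the budget, or None."""
    eligible = [m for m in measurements if m.latency_s <= budget_s]
    return max(eligible, key=throughput, default=None)


# Hypothetical numbers chosen only to illustrate the effect.
system_a = [Measurement(1, 1.0, 128), Measurement(8, 2.0, 128), Measurement(32, 4.0, 128)]
system_b = [Measurement(1, 2.0, 128), Measurement(8, 3.0, 128), Measurement(32, 5.0, 128)]

budget = 2.5  # seconds, the latency target mentioned in the article
a = best_under_budget(system_a, budget)
b = best_under_budget(system_b, budget)

ratio_batch1 = throughput(system_a[0]) / throughput(system_b[0])
ratio_budget = throughput(a) / throughput(b)

print(f"batch size 1: {ratio_batch1:.1f}x")
print(f"under {budget}s budget: {ratio_budget:.1f}x "
      f"(batch {a.batch_size} vs batch {b.batch_size})")
```

With these made-up inputs, the first system is 2x faster at batch size 1 but 8x faster under the budget, because only it can fit a batch of 8 within 2.5 seconds. The real benchmarks involve many more variables, so this only shows why the two ways of comparing can diverge.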

Although TensorRT-LLM is freely available on GitHub, AMD's recent benchmarks used alternative software that does not yet support Hopper's Transformer Engine and lacks these optimizations, Nvidia says. AMD also did not use the TensorRT-LLM release published by Nvidia in September, which doubles LLM inference performance, nor the Triton Inference Server. According to Nvidia, the absence of TensorRT-LLM, the Transformer Engine and Triton resulted in suboptimal performance. Critics suggest that AMD took this approach because it does not have equivalent software of its own.

AMD releases new metrics showing MI300X is superior to H100

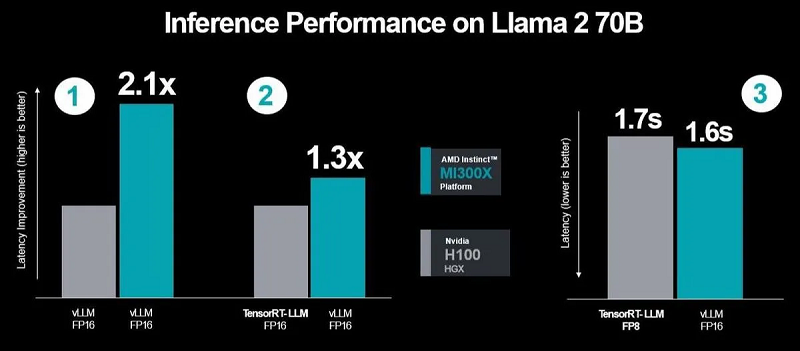

Surprisingly, AMD responded to Nvidia's challenge with new performance measurements of the MI300X, showing a 30% performance advantage over the H100 even when the H100 runs a fine-tuned software stack. AMD mirrored Nvidia's testing conditions with TensorRT-LLM and went further by accounting for latency, a common constraint in server workloads. AMD laid out the key points of its argument, stressing in particular the advantages of FP16 with vLLM over FP8, which is tied to the proprietary TensorRT-LLM.

AMD claimed that Nvidia used a selective set of inference workloads. The company also said that Nvidia used its own TensorRT-LLM on the H100 instead of vLLM, a widely used open source library. In addition, Nvidia took performance figures obtained with vLLM and the FP16 data type on AMD hardware and compared them with DGX H100 results obtained with TensorRT-LLM and the FP8 data type, which AMD says produced misleading results. AMD emphasized that it used vLLM with the FP16 data type in its testing because of its widespread use and because vLLM does not support FP8.
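The data type matters because it changes how many bytes each model weight occupies: FP16 uses 2 bytes per parameter and FP8 uses 1. The sketch below is a rough back-of-the-envelope estimate for a model with a nominal 70 billion parameters. It counts weights only and ignores the KV cache, activations and runtime overhead. The per-GPU memory capacities (80 GB for the H100, 192 GB for the MI300X) come from publicly listed specifications and are treated here as assumptions, and the function names are illustrative.

```python
import math

# Bytes needed to store one parameter in each data type.
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}

# Assumed per-accelerator HBM capacity in GB (public spec-sheet figures).
HBM_GB = {"H100": 80, "MI300X": 192}

# Nominal parameter count for a "70B" model (an approximation).
NUM_PARAMS = 70_000_000_000


def weight_footprint_gb(num_params: int, dtype: str) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_accelerators(footprint_gb: float, hbm_gb: float) -> int:
    """Lower bound on accelerators needed just to hold the weights."""
    return math.ceil(footprint_gb / hbm_gb)


for dtype in ("fp16", "fp8"):
    size = weight_footprint_gb(NUM_PARAMS, dtype)
    for chip, capacity in HBM_GB.items():
        n = min_accelerators(size, capacity)
        print(f"{dtype}: {size:.0f} GB of weights, at least {n} x {chip}")
```

Under these assumptions, halving the bytes per weight halves the memory that must be read for each generated token, which is one reason an FP8 result and an FP16 result are not a like-for-like comparison.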

Another point of contention between the two companies concerns latency in server environments. AMD criticizes Nvidia for focusing solely on throughput without addressing real-world latency constraints. To counter Nvidia's testing methodology, AMD ran three benchmarks using Nvidia's TensorRT-LLM toolkit, with the final test specifically measuring the latency of the MI300X with vLLM and the FP16 data type against the H100 with TensorRT-LLM. AMD's new tests showed improved performance and lower latency.

AMD made additional optimizations, resulting in a 2.1x performance advantage over the H100 when running vLLM on both platforms. It is now up to Nvidia to decide how it wants to respond. However, the company must also recognize that insisting on FP8 with the closed TensorRT-LLM system would require the industry to abandon FP16, and with it vLLM.

The market for AI hardware is developing very quickly and competition is intensifying

The competition between Nvidia and AMD has been going on for a long time. What is interesting, however, is that for the first time Nvidia has decided to directly compare the performance of its products with those of AMD. This clearly shows that competition in this area is intensifying. Furthermore, the two chip giants are not the only ones trying to gain a foothold in the market. Others, such as Cerebras Systems and Intel, are also working on it. Intel CEO Pat Gelsinger announced the Gaudi3 AI chip at the company's recent AI Everywhere event. However, very little information has been revealed about this processor.

Moreover, the H100 will soon be superseded. Nvidia will introduce the GH200 chips early next year, which will succeed the H100. AMD compared its new chips with the H100, not with the GH200, which is expected to outperform previous chips. Because the competition is so fierce, AMD could be treated as a backup option by many companies, including Meta, Microsoft and Oracle. In this regard, Microsoft and Meta recently announced that they are considering integrating AMD chips into their data centers.

Gelsinger predicted that the GPU market will be worth about $400 billion by 2027, so there is room for plenty of competitors. For his part, Cerebras CEO Andrew Feldman denounced Nvidia's alleged monopolistic practices during the Global AI Conclave event. “We spend our time looking for ways to be better than Nvidia. By next year, we will build 36 exaflops of computing power for AI,” he said of the company’s plans. Feldman is also reportedly in talks with the Indian government to advance AI computing in the country.

Cerebras also signed a $100 million contract for an AI supercomputer with G42, an AI startup in the United Arab Emirates, where Nvidia is not allowed to operate. As for the tug-of-war between Nvidia and AMD, reports indicate that the MI300X's FLOPS specifications are better than those of the Nvidia H100 and that the MI300X also has more HBM memory. However, it takes optimized software to drive an AI chip and turn those FLOPS and bytes into value for the customer. "AMD's ROCm software has made significant progress, but AMD still has a long way to go," one reviewer notes.

Another welcomes the intensifying rivalry between AMD and Nvidia: "It's great to see AMD competing with Nvidia. Everyone will benefit, probably including Nvidia, which can't produce enough GPUs to meet market demand and will be less inclined to rest on its laurels."

Sources: Nvidia, AMD

And you?

What is your opinion on this topic?

What do you think of AMD's MI300X and Nvidia's H100 chips?

What comparisons do you make between the two AI accelerators?

In your opinion, will AMD's MI300X chip succeed in establishing itself in the market?

Do you think Nvidia can once again set itself apart from the competition with the H200 chip?

Will Cerebras and Intel be able to eclipse Nvidia in the GPU market in the near future?

What do you think about the allegations that Nvidia is using anticompetitive practices to maintain its monopoly?

See also

AMD announces Instinct MI300, generative AI accelerators and data center APUs that deliver up to 1.3x improved performance in AI workloads

Meta and Microsoft announce that they will buy AMD's new AI chip to replace Nvidia's

AMD is acquiring Nod.ai, an artificial intelligence software startup, to strengthen its software capabilities and catch up with Nvidia