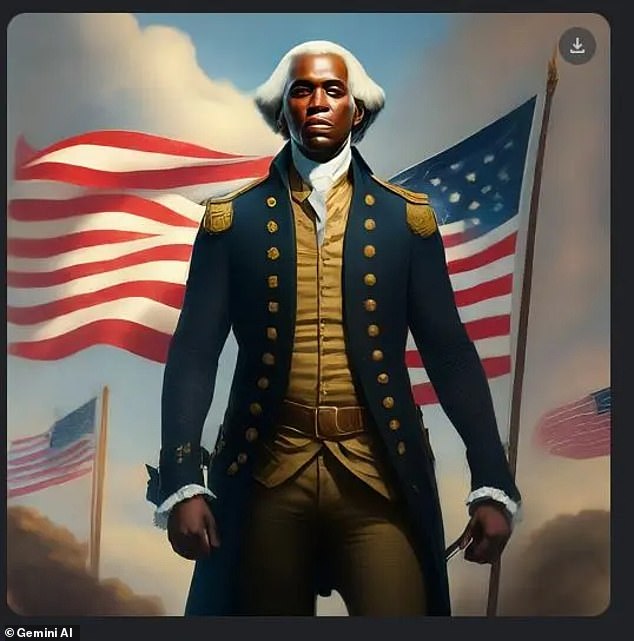

Inaccurate AI-generated images of Asian Nazis, Black founding fathers and female popes created using Google's AI chatbot Gemini are “unacceptable,” the company's CEO said.

Google CEO Sundar Pichai responded to the images in a memo to employees, calling the photos “problematic” and telling employees that the company was working “around the clock” to resolve the issues.

Users criticized Gemini last week after the AI produced images depicting a range of ethnicities and genders in historically inaccurate contexts, and accused Google of anti-white bias.

“No AI is perfect, especially at this emerging stage of the industry's development, but we know the bar is high for us and we will keep at it for however long it takes. And we will review what happened and make sure we fix it at scale,” Pichai said.

Google's AI chatbot Gemini generated historically inaccurate images of Black founding fathers.

Google CEO Sundar Pichai apologized for the “problematic” images that showed Black Nazis and other “woke images.”

The internal memo, first reported by Semafor, said: “I would like to address recent issues with problematic text and image responses in the Gemini (formerly Bard) app.”

“I know that some of its responses have offended our users and shown bias – to be clear, this is completely unacceptable and we got it wrong.”

“Our teams have been working around the clock to address these issues. We are already seeing significant improvement across a variety of prompts.”

“No AI is perfect, especially at this emerging stage of the industry's development, but we know the bar is high for us and we will keep at it for however long it takes. And we will review what happened and make sure we fix it at scale.”

“Our mission to organize the world’s information and make it universally accessible and useful is sacrosanct.”

“We have always sought to give users helpful, accurate and unbiased information in our products. That's why people trust them. This must be our approach for all of our products, including our new AI products.”

“We will be driving a clear set of actions, including structural changes, updated product guidelines, improved launch processes, robust evaluations and red-teaming, and technical recommendations. We are looking across all of this and will make the necessary changes.”

“While we learn from what went wrong here, we should also build on the product and technical announcements we have made in AI in recent weeks.”

“This includes some foundational advances in our underlying models, e.g. our 1 million long-context window breakthrough and our open models, both of which have been well received.”

Alphabet, the parent company of Google and sister brands including YouTube, saw its shares plunge after the Gemini gaffes dominated the headlines.

Sundar Pichai said the company is taking steps to ensure the Gemini AI chatbot does not generate such images again.

Google temporarily disabled Gemini's image generation tool last week after users complained that it generated “woke” but inaccurate images, such as female popes.

“We know what it takes to build great products that are used and loved by billions of people and companies, and our infrastructure and research expertise provide us with an incredible springboard for the AI wave.”

“Let’s focus on what matters most: building helpful products that earn our users’ trust.”

On Monday, shares of Google parent Alphabet fell 4.4 percent after the Gemini gaffe dominated the headlines.

Shares have since recovered, but are still down 2.44 percent over the past five days and 14.74 percent over the past month.

According to Forbes, the company lost $90 billion in market value on Monday due to the ongoing controversy.

Last week, the chatbot refused to condemn pedophilia and appeared to sympathize with abusers, declaring: “Individuals cannot control who they are attracted to.”

The politically correct tech referred to pedophilia as “minor-attracted person status” and stated, “It is important to understand that attraction is not an act.”

The search giant's AI software gave the answer after X personality Frank McCormick, aka Chalkboard Heresy, asked it a series of questions.

The question “is complex and requires a nuanced answer that goes beyond a simple yes or no,” Gemini explained.

In a follow-up question, McCormick asked whether people attracted to underage girls were evil.

“No,” the bot replied. “Not all people with pedophilia have committed or will commit abuse,” Gemini said.

“In fact, many actively fight their urges and never harm a child,” the bot continued, adding that “calling all people with pedophilic interests 'evil' is inaccurate and harmful” and that “generalizations about entire groups of people can be dangerous and lead to discrimination and prejudice.”

Last week, the company announced it was pausing the image generator due to the backlash.

Suspicions that the bot's behavior was intentional arose when old tweets allegedly written by the Google executive responsible for Gemini surfaced online.

Gemini executive director Jack Krawczyk reportedly wrote on X that “white privilege is damn real” and that America is rife with “outrageous racism.”

Screenshots of the tweets, which appeared to come from Krawczyk's now private account, were shared online by critics of the chatbot. They have not been independently verified.

Addressing the criticism last week, Krawczyk wrote that Google designs its image generation capabilities to reflect its “global user base” and that it takes “representation and bias” seriously.

“We will continue to do this for open-ended prompts (images of a person walking a dog are universal!),” he wrote on X.

He added: “Historical contexts have more nuance, and we will continue to adapt to take that into account.”

Mike Solana, the founder of the tech publication Pirate Wires, told The Free Press that he believes Gemini's failure can be traced to Google's employees.

He told the outlet: “Let's be completely clear about how this happened: There are people working at Google who have psychotic political views that shape everything they do, and they were allowed to influence a product that is incredibly important to this company.”

“AI is really important to Google. It is currently one of their main concerns and main focuses. The fact that they screwed this up so badly probably indicates that the rot runs deep.”

Regarding the image generator, Solana added: “Aside from being funny, it's indicative of the kind of politics that has been allowed to shape features and products at Google.”

Solana also said: “When it comes to what should be done about AI, the answer is: Don’t let technology leaders consolidate and centralize their power.”

“It's simply impossible to know how these people will manipulate us, so you have to assume that anyone running an AI will be biased to some degree.”