AI chatbots can do a poor job of protecting your personal information and may expose your conversations. This had already been shown with ChatGPT; today it is Google Bard’s turn. Its conversations were found indexed on Google for anyone to see.

Google Bard // Source: Frandroid

Conversations between users and Google Bard were published… on Google. For a while, the search engine indexed conversations for which users had created a sharing link. Everything has since returned to normal, but the incident has sparked a new controversy over AI chatbots and privacy.

Conversations with Google Bard… in the wild

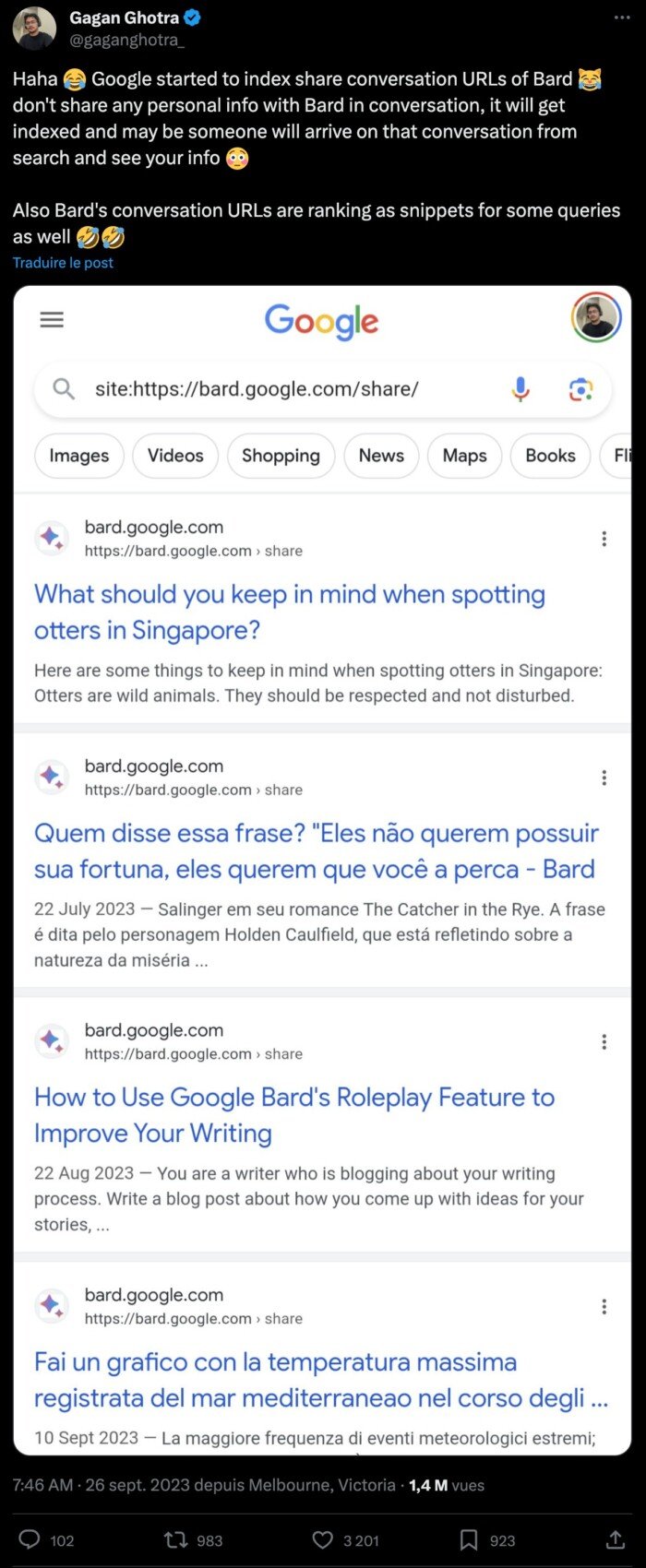

SEO consultant Gagan Ghotra raised the alarm on X (formerly Twitter) on September 26th. He posted a screenshot of a Google search that listed Google Bard conversations shared by users. Those users, however, had never agreed to have them indexed by a search engine.

Bard has a feature that creates a link to a conversation, which is useful for sharing your experiments with friends, family or colleagues. In theory, anyone could use keywords to find conversations on specific topics and use their content to help identify the people who prompted Bard’s responses.

Source: Frandroid via

The Google SearchLiaison account (dedicated to the search engine) responded on X: “Bard allows people to share threads if they want. We also do not intend for these shared discussions to be indexed by Google Search. We are currently working to prevent them from being indexed.” Since then, the same search no longer returns any pages, so the error has been fixed.

This isn’t the first time chatbots have made a mistake

Last April, we learned that Samsung had discovered a leak of confidential information through ChatGPT, which the company’s engineers were using in their work. Measures were then taken to prevent such leaks. Samsung was right to act: a month earlier, OpenAI had temporarily shut down ChatGPT, Siècle Digital reported, after a bug allowed users to access other users’ conversation history. The content of the conversations was not visible, but their titles were. More recently, in August, a bug caused ChatGPT to return a random response that came from another user’s conversation. ChatGPT’s handling of personal data was problematic enough that Italy banned the service on its territory for a month before reauthorizing it.

Our recommendation is not to share personal information with chatbots, whether Google Bard, ChatGPT, Bing Chat or others. First, because the links for sharing discussions, on ChatGPT as on Bard, are public: anyone who has the URL can see the content.